Relativity Fest Sydney shines a spotlight on aiR for Review

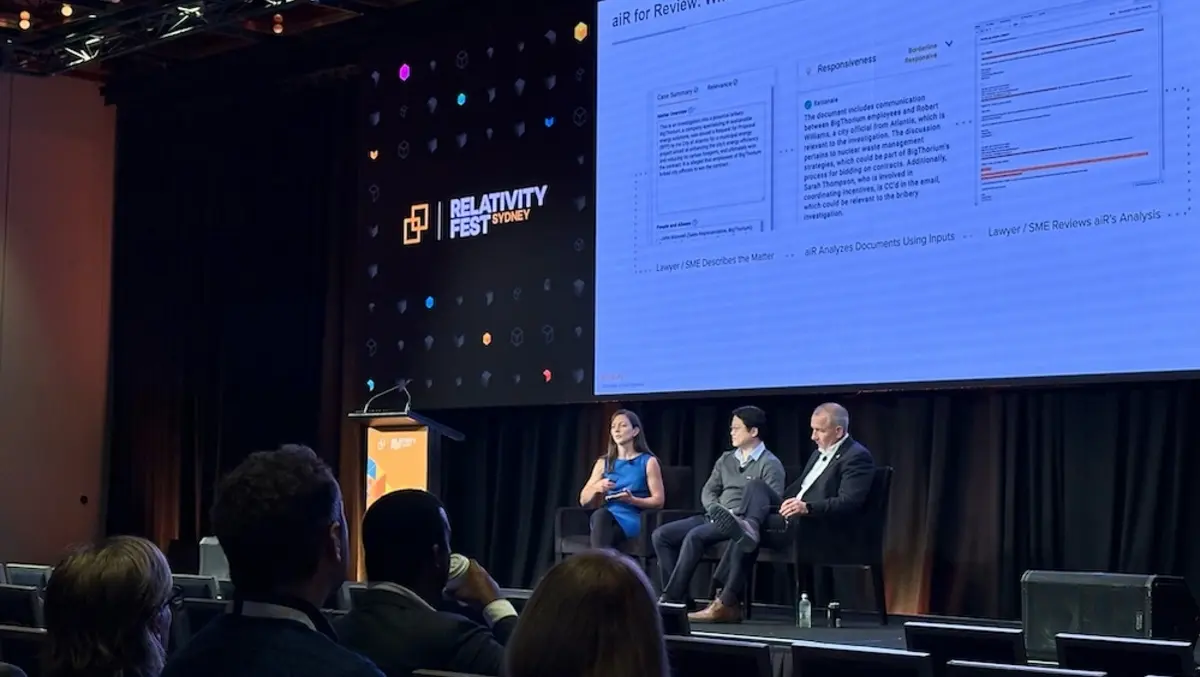

Relativity's aiR for Review was the focus of a panel discussion at this year's Relativity Fest Sydney, where experts from both product and practice discussed how the platform is changing the way legal teams approach document review.

The session, moderated by Elise Tropiano, Senior Director of Product Management at Relativity, included insight from Michael Song, Head of GTDocs at Gilbert + Tobin, and Chris Haley, Vice President of Practice Empowerment at Relativity.

Generative AI is reshaping the legal industry. It has demonstrated remarkable proficiency at processing human language and understanding material for legal tasks.

While legal practitioners are only just beginning to explore the ways these new large language models can transform their work, one opportunity has already gained traction: document review, a notoriously time-consuming and expensive process.

Relativity aiR for Review uses generative AI to help legal teams conduct document review more efficiently and accurately across ever-expanding volumes of data.

Tropiano kicked off the session by describing aiR for Review as "a practical entry point for legal teams exploring generative AI."

"It's tackling familiar use cases," she said. "We're talking about reviewing for relevance, applying issue tags, and we're grounding those predictions in the document text, preventing hallucinations."

After 20 months of development, testing and client collaboration, aiR for Review is now generally available and in use on live matters. Tropiano noted that the platform consistently identifies at least 90% of truly relevant documents (a recall of 90% or higher), and often more.

From the outset, aiR for Review was designed to fit seamlessly into RelativityOne, integrating with active learning and search, and offering robust data privacy. "It's really that extra team you may need in a pinch," she said.

The panel explored three key use cases: quality control (QC), analysing incoming productions, and conducting first-pass reviews.

Michael Song shared how his team used aiR for Review in a high-pressure QC scenario. With only hours to spare before a deadline, traditional methods were too slow.

"We just fed that into review," he said. "And what it delivers is that really fast, near-instantaneous dashboard. It allows us to prioritise the issues we want to turn our minds to."

Song explained that aiR helped them quickly flag documents where AI and human reviewers disagreed, streamlining the process of double-checking critical material. "Even when you're reviewing the hits, the QA itself is faster. You're really honing in on what's important."

Chris Haley echoed the value of that approach, noting that many clients start with QC as a low-risk way to trial aiR for Review.

"You're not relying on it to make the final production call," he said. "But it's helping you make your review more accurate, whether it's a traditional linear review or a CAL-type review."

He added that early-stage QC with aiR allows teams to detect misunderstandings before they snowball.

"When there's a conflict (if aiR says it's relevant but the first-level reviewer doesn't), you've got citations right there. You don't need to read the whole document."

Tropiano also pointed out that firms often trial aiR for Review on dormant matters, comparing AI predictions against known outcomes.

"That process builds so much trust," she said. "People see that aiR would have caught the truly relevant documents."

From a return-on-investment perspective, Haley said the platform's efficiency gains offset its additional cost.

"You make back the time savings in your QC team, especially with those more expensive second-level reviewers," he said. "You can also QC more and improve overall accuracy."

On analysing incoming productions, Song described a typical challenge: thousands of documents landing unexpectedly and a pressing need to understand what's in them.

"We started with structured analytics to reduce the set," he explained. "Then we spent a lot more time on prompt iteration. After a few samples, we asked more targeted questions."

The result was faster insight and more strategic decision-making. Different teams could quickly extract what they needed, which shaped how they would proceed with review.

Haley related this to his previous experience at Troutman Pepper, recalling how shallow searches in large productions led to surprises.

"There was always a document or two that became a surprise later," he said. "So having that capability to code for issues across incoming data is super helpful."

He pointed out that the citations and rationale provided by aiR aren't just for validation; they also support downstream tasks like witness preparation and motions.

"I remember showing a partner the rationale and it uncovered an issue they hadn't even considered," he said.

Song agreed, emphasising how AI adds value beyond what search terms and active learning can offer.

"With LLMs and prompting, you're giving it the space to be creative," he said. "You can surface things you didn't know about."

But the most transformative use case, the panel agreed, is using aiR for Review as a "first-pass review tool."

Song described a time-sensitive matter, handled over Christmas, in which a rigorous prompting process was key.

"We focused a lot more on prompt iteration, recall and precision," he said. "Once we got comfortable, we applied it over the full review set, then validated it using active learning."

Song noted that this workflow, although more advanced, is still grounded in traditional methodologies. "You're still relying on defensible outcomes," he said.

Haley framed this as part of a broader shift in legal tech, one that rewards those who lean in early.

"If we lean into these moments, it can propel our industry and careers," he said. "AI is beating humans in review - consistently."

Song added a note of caution, advocating for a nuanced approach.

"With active learning, we had to level up our first-round reviewers, and it's the same with aiR," he said. "There's a critical role for experienced reviewers to help shape validation."

Haley agreed, stressing that aiR is an additional tool, not a replacement for established workflows.

"You shouldn't jettison email threading or keyword analysis," he said. "This just allows you to spend less time on the low-value tasks and more on the work that matters."

For those unsure where to begin, Song has one message: "Just get started."

"Don't agonise over which test or prompt to use first. You won't get it perfect, but once you reanalyse, you'll understand it better."