NVIDIA to build AI factories and data centres for the next industrial revolution

NVIDIA, in collaboration with leading computer manufacturers, has unveiled an extensive range of systems powered by the NVIDIA Blackwell architecture, featuring Grace CPUs and NVIDIA networking infrastructure. This initiative aims to revolutionise AI factories and data centres, driving the next wave of generative AI breakthroughs.

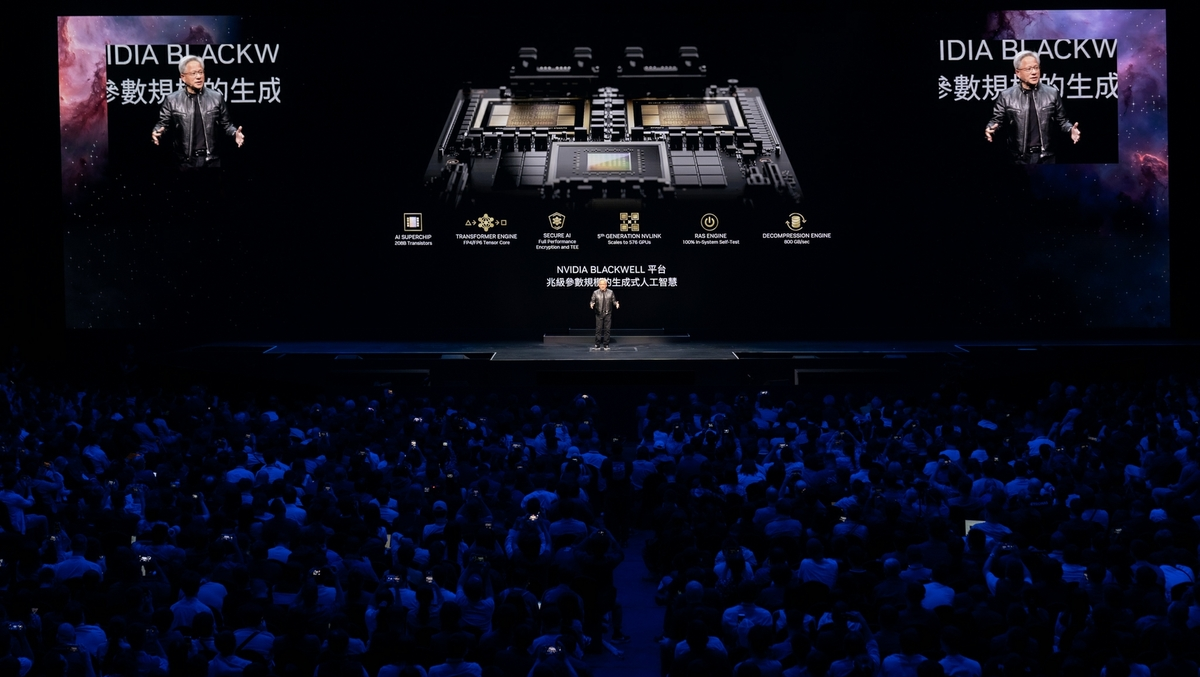

During his keynote at Computex, Jensen Huang, NVIDIA's founder and CEO, announced that ASRock Rack, ASUS, GIGABYTE, Ingrasys, Inventec, Pegatron, QCT, Supermicro, Wistron, and Wiwynn will deliver cloud, on-premises, embedded, and edge AI systems leveraging NVIDIA GPUs and networking. Huang emphasised the significance of this transition, stating, "The next industrial revolution has begun. Companies and countries are partnering with NVIDIA to shift the trillion-dollar traditional data centres to accelerated computing and build a new type of data centre – AI factories to produce a new commodity: artificial intelligence."

Diverse AI system offerings

The unveiled systems cater to a wide range of applications, spanning single-GPU to multi-GPU configurations, x86- to Grace-based processors, and air to liquid cooling. This versatility lets enterprises choose the optimal configuration for their specific workloads.

The NVIDIA MGX modular reference design platform now supports NVIDIA Blackwell products, including the new NVIDIA GB200 NVL2 platform. This platform is designed for mainstream large language model inference, retrieval-augmented generation, and data processing. Thanks to the high memory bandwidth of its NVLink-C2C interconnects and its dedicated decompression engines, it delivers up to 18 times faster data processing and 8 times better energy efficiency compared with x86 CPUs.

Modular reference architecture for accelerated computing

NVIDIA MGX offers a reference architecture that allows computer manufacturers to quickly and cost-effectively build more than 100 system design configurations. This approach cuts development costs by up to three-quarters and development time by two-thirds, to just six months. More than 90 systems from over 25 partners that use the MGX reference architecture are either released or in development.

Both AMD and Intel are supporting the MGX architecture, planning to deliver their own CPU host processor module designs, including the next-generation AMD Turin platform and the Intel Xeon 6 processor with P-cores. These reference designs enable server system builders to save development time while ensuring consistency in design and performance.

Expanding the ecosystem

NVIDIA's comprehensive partner ecosystem includes TSMC, the world's leading semiconductor manufacturer, along with global electronics makers such as Amphenol, Asia Vital Components (AVC), Cooler Master, Colder Products Company (CPC), Danfoss, Delta Electronics, and LITEON. This collaboration aims to quickly develop and deploy new data centre infrastructure to meet the needs of enterprises worldwide.

Enterprises can also access the NVIDIA AI Enterprise software platform, which includes NVIDIA NIM inference microservices. This platform enables the creation and operation of production-grade generative AI applications.

Taiwan's adoption of Blackwell

During his keynote, Huang highlighted Taiwan's rapid adoption of Blackwell technology. Chang Gung Memorial Hospital, Taiwan's leading medical centre, plans to use the NVIDIA Blackwell computing platform to advance biomedical research and enhance imaging and language applications, ultimately improving clinical workflows and patient care.

Foxconn, one of the world's largest electronics manufacturers, intends to use NVIDIA Grace Blackwell to develop smart solution platforms for AI-powered electric vehicles and robotics, as well as a growing number of language-based generative AI services that provide more personalised experiences to its customers.

Jonney Shih, Chairman of ASUS, highlighted the companies' collaboration: "ASUS is working with NVIDIA to take enterprise AI to new heights with our powerful server lineup, which we'll be showcasing at COMPUTEX. Using NVIDIA's MGX and Blackwell platforms, we're able to craft tailored data centre solutions built to handle customer workloads across training, inference, data analytics, and HPC."

Future prospects and challenges

During the press Q&A, Jensen Huang addressed various topics, including partnerships, AI advancements, and the future of NVIDIA. Responding to a question about HBM memory partners, Huang clarified, "We work with three partners – SK Hynix, Micron, and Samsung. All three of them will be providing us HBM memories. We're trying to get them qualified and adopted into our manufacturing as quickly as possible."

When asked about the rapid development pace and supply chain impacts, Huang shared, "Our chips are designed with a bunch of NVIDIA-created AIs. We need to have that, but very importantly, while other companies are having layoffs, we're growing very fast. We've had the pleasure of hiring a lot of great engineers."

Discussing the competition and AI's future, Huang remarked, "AI is the most powerful technology force of our time. It will revolutionise every industry, and NVIDIA is at the forefront of this transformation. We are providing the tools, platforms, and systems to drive AI innovation."

NVIDIA's ambitious plans, supported by a robust partner ecosystem, signify a transformative shift in the computer industry. As enterprises embrace accelerated computing and AI factories, NVIDIA's Blackwell architecture and MGX modular platform are set to play a pivotal role in shaping the future of generative AI and data centre infrastructure.

As Huang concluded, "The most important consideration is possibility. We need to be able to make it at all. The ecosystem here is incredible, and I'm very proud of all of my partners here. This new era is coming, and it's even bigger than the last ones combined."
